Introduction

Since its inception in 2006, AWS has grown into an extensive suite of cloud computing platforms, tools, and services, becoming the backbone of many businesses worldwide.

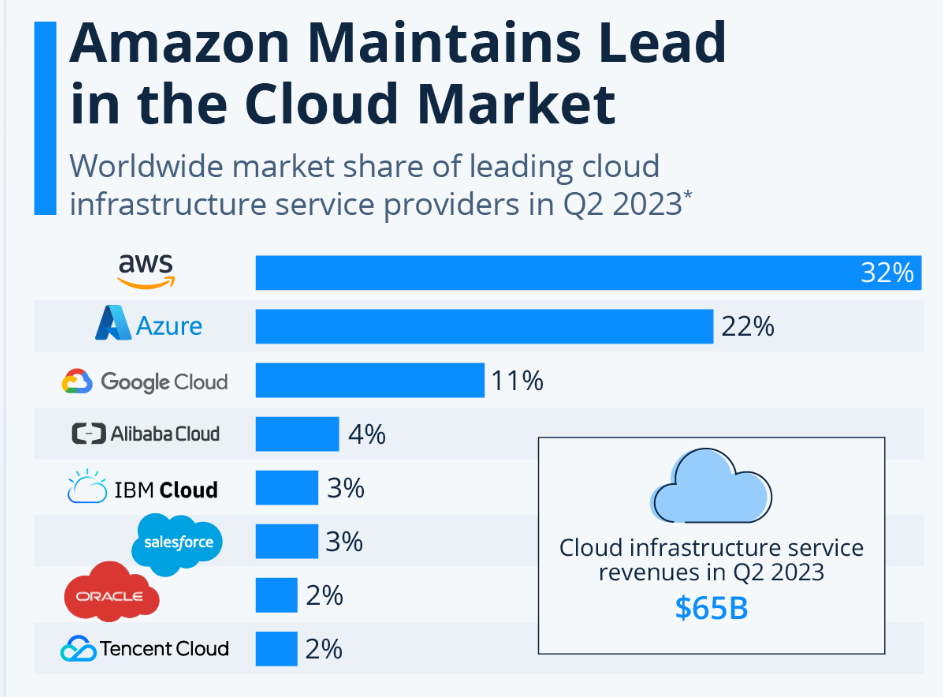

According to Statista, AWS holds about 32% of the cloud computing market, more than any single competitor. This lead highlights AWS’s influence in the industry and shows why AWS expertise is so valued in the tech sector.

The search for talent in this domain is intense. Companies are constantly scouting for engineers, system architects, and DevOps professionals capable of navigating the intricacies of AWS-based infrastructures.

These individuals are expected to tackle complex technical challenges and harness the advanced capabilities of AWS technologies. In this landscape, proficiency in AWS has become an essential skill for tech professionals who want to demonstrate their cloud computing expertise.

This article aims to help hiring managers craft effective AWS technical interview questions. Each question below comes with a suggested answer and an explanation, giving hiring managers the tools they need to assess software engineering candidates’ AWS skills accurately.

What is AWS?

Amazon Web Services (AWS) is a comprehensive and widely adopted cloud computing platform provided by Amazon. It offers a vast array of services and solutions catering to various digital and cloud computing needs. AWS is a dynamic and evolving platform that has become an essential tool in the toolbox of modern businesses and developers.

According to HG Insights, 2.38 million businesses are expected to buy AWS cloud computing services in 2024. This figure shows how widely AWS has been adopted and highlights its critical role in powering a significant portion of the business and technological landscape.

Key Features

- Extensive Service Range: AWS provides a wide range of services covering areas such as computing power, storage options, networking, databases, analytics, machine learning, Internet of Things (IoT), security, and enterprise applications, among others. This diversity allows it to cater to the unique requirements of different projects and industries.

- Global Infrastructure: AWS’s infrastructure is globally distributed and includes a large number of data centers across various geographical regions. This global presence ensures high availability, low latency, and robust disaster recovery capabilities.

- Scalability and Flexibility: One of the key features of AWS is its scalability. Businesses can scale their AWS services up or down based on demand, making it a highly flexible solution for varying workload requirements.

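- Security and Compliance: AWS is known for its commitment to security. The platform offers strong security measures and a broad set of compliance certifications that help customers protect their data and meet regulatory requirements. Under AWS’s shared responsibility model, AWS secures the underlying infrastructure, while customers remain responsible for securing what they build on it.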

- Innovative and Evolving: AWS is constantly evolving, with Amazon continuously adding new services and features. This commitment to innovation keeps AWS at the forefront of cloud computing technologies.

Well-known companies using AWS include:

- Netflix: One of the largest users of AWS, Netflix relies on its vast array of services for streaming millions of hours of content to users worldwide.

- Airbnb: The hospitality service utilizes AWS for hosting its website and back-end operations, leveraging its scalability and reliability for managing large volumes of traffic.

- Samsung: AWS powers a significant portion of Samsung’s vast array of electronic services and devices, from mobile devices to home appliances.

- General Electric (GE): GE utilizes AWS for a wide range of its industrial and digital services, taking advantage of its advanced analytics and machine learning capabilities.

- BMW: The automotive giant uses AWS for its BMW Group Cloud, supporting its digital products and services.

Top 7 AWS Interview Questions

Let’s explore the top 7 AWS interview questions:

1. Upload a File to S3

| Field | Details |
| --- | --- |
| Task | Create a Python function to upload a file to a specified Amazon S3 bucket. |
| Input Format | Two strings: the first is the file path on the local machine, and the second is the name of the S3 bucket. |
| Output Format | A string representing the URL of the uploaded file in the S3 bucket. |

Suggested Answer

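```python
import os
from urllib.parse import quote

import boto3


def upload_file_to_s3(file_path, bucket_name):
    # Credentials and region come from the standard Boto3 configuration chain
    s3 = boto3.client("s3")
    # Use the file's base name as the object key (portable across operating systems)
    file_name = os.path.basename(file_path)
    # upload_file switches to multipart uploads automatically for large files
    s3.upload_file(file_path, bucket_name, file_name)
    # Percent-encode the key so names with spaces or special characters form a valid URL
    file_url = f"https://{bucket_name}.s3.amazonaws.com/{quote(file_name)}"
    return file_url
```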

Code Explanation

The function upload_file_to_s3 uses Boto3, the AWS SDK for Python. It starts by creating an S3 client. It then extracts the file name from the file path and uses the upload_file method to upload the file to the specified bucket. Finally, it constructs the uploaded file URL and returns it.
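A URL like the one returned above is only readable by anonymous users if the object is public. A natural follow-up is to keep the bucket private and hand out a time-limited presigned URL instead. The sketch below assumes a hypothetical helper name, upload_and_presign, and takes the S3 client as an optional parameter so it can be exercised with a stub; when the client is omitted, it creates a default Boto3 client.

```python
import os


def upload_and_presign(file_path, bucket_name, expires_in=3600, s3_client=None):
    """Upload a file and return a presigned GET URL (hypothetical helper)."""
    if s3_client is None:
        import boto3  # imported lazily so a stub client can be injected in tests
        s3_client = boto3.client("s3")
    key = os.path.basename(file_path)
    s3_client.upload_file(file_path, bucket_name, key)
    # The URL carries a signature and an expiry, so the object itself can stay private
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket_name, "Key": key},
        ExpiresIn=expires_in,
    )
```

Signing happens locally from the caller's credentials, so generating the URL does not require a network round trip; anyone holding the URL can read the object until it expires.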

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question is effective for evaluating a candidate’s skills in cloud-based storage solutions, particularly in automating and streamlining file storage operations using AWS services.

2. Launch an EC2 Instance

| Field | Details |
| --- | --- |
| Task | Create a Python function using Boto3 to launch a new Amazon EC2 instance. |
| Input Format | Two strings: the first is the instance type, and the second is the Amazon Machine Image (AMI) ID. |
| Output Format | A string representing the ID of the launched EC2 instance. |

Suggested Answer

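```python
import boto3


def launch_ec2_instance(instance_type, image_id):
    # The resource interface returns Instance objects rather than raw dicts
    ec2 = boto3.resource("ec2")
    instances = ec2.create_instances(
        ImageId=image_id,
        InstanceType=instance_type,
        MinCount=1,  # launch exactly one instance
        MaxCount=1,
    )
    # create_instances returns a list; the instance may still be "pending" here
    return instances[0].id
```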

Code Explanation

The function launch_ec2_instance uses the Boto3 library to interact with AWS EC2. It creates an EC2 resource and then calls create_instances with the specified image ID and instance type. The MinCount and MaxCount parameters define the number of instances to launch, which is set to 1 in this case. The function returns the ID of the newly created instance.
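Strong candidates often extend this answer by tagging the instance at launch and waiting until it is actually running before returning. The sketch below uses the real create_instances parameter TagSpecifications and the Instance waiter wait_until_running; the function name launch_tagged_instance and its name parameter are hypothetical, and the EC2 resource is injectable so the function can be exercised with a stub.

```python
def launch_tagged_instance(instance_type, image_id, name, ec2_resource=None):
    """Launch one instance with a Name tag and wait until it runs (hypothetical helper)."""
    if ec2_resource is None:
        import boto3  # imported lazily so a stub resource can be injected in tests
        ec2_resource = boto3.resource("ec2")
    instances = ec2_resource.create_instances(
        ImageId=image_id,
        InstanceType=instance_type,
        MinCount=1,
        MaxCount=1,
        # Tagging at launch time means the instance is never visible untagged
        TagSpecifications=[
            {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    )
    instance = instances[0]
    # Polls EC2 until the instance state is "running"; raises if the waiter gives up
    instance.wait_until_running()
    # Refresh cached attributes (for example, public_ip_address) after the wait
    instance.reload()
    return instance.id
```

Waiting makes the function slower but lets callers use the instance immediately, which is a useful trade-off to discuss in the interview.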

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question assesses a candidate’s practical skills in managing AWS resources and their ability to automate cloud operations, which are crucial for efficient cloud resource management and scalability in AWS environments.

3. Read a File from S3 with Node.js

| Field | Details |
| --- | --- |
| Task | Create a Node.js AWS Lambda function to read an object from an S3 bucket and log its content. |
| Input Format | An event object containing details about the S3 bucket and the object key. |
| Output Format | The content of the file is logged to the console. |

Suggested Answer

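```javascript
// AWS SDK for JavaScript v3, which the Node.js 18+ Lambda runtimes include
const { S3Client, GetObjectCommand } = require('@aws-sdk/client-s3');

// Create the client outside the handler so warm invocations reuse it
const s3 = new S3Client({});

exports.handler = async (event) => {
  const record = event.Records[0];
  const params = {
    Bucket: record.s3.bucket.name,
    // Keys in S3 event notifications are URL-encoded (spaces arrive as '+')
    Key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' ')),
  };
  const data = await s3.send(new GetObjectCommand(params));
  // In SDK v3, Body is a stream; transformToString() reads it fully into a string
  console.log(await data.Body.transformToString());
};
```

Code Explanation

This function is an AWS Lambda handler written in Node.js. It uses the AWS SDK for JavaScript v3, which is bundled with the Node.js 18 and later Lambda runtimes, to interact with S3. Lambda invokes the handler with an S3 event notification that identifies the bucket and object. The handler reads both from the first record, decoding the object key because S3 URL-encodes keys in event notifications, and sends a GetObjectCommand to retrieve the object.

The await syntax handles the asynchronous calls. Because the response Body is a stream in SDK v3, transformToString() reads it fully before the content is logged to the console.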
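Two details in this handler are easy to get wrong: a single notification can carry more than one record, and object keys arrive URL-encoded. The parsing step is language-neutral, so it is sketched here in Python, the language of most other examples in this article. The function name s3_objects_from_event is hypothetical; the event shape (Records, s3.bucket.name, s3.object.key) follows the S3 event notification format used by the handler above.

```python
from urllib.parse import unquote_plus


def s3_objects_from_event(event):
    """Return (bucket, key) pairs from an S3 event notification (hypothetical helper)."""
    pairs = []
    # Iterate over every record instead of assuming Records[0] is the only one
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded: '+' stands for a space and %XX for other characters
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

A candidate who only reads Records[0] and skips decoding will pass a simple test but fail on files such as "Q1 summary.txt".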

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question evaluates a candidate’s skills in integrating AWS services with serverless computing, specifically in handling event-driven functions in AWS Lambda. It’s a relevant and practical scenario in many modern cloud-based applications.

4. Write to a DynamoDB Table

| Field | Details |
| --- | --- |
| Task | Create a Python function using Boto3 to add a new item to a DynamoDB table. |
| Input Format | Two strings: the first is the name of the DynamoDB table, and the second is a JSON string representing the item to be added. |
| Output Format | The response from the DynamoDB put operation. |

Suggested Answer

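```python
import json
from decimal import Decimal

import boto3


def add_item_to_dynamodb(table_name, item_json):
    # The resource interface offers a higher-level, Pythonic API for DynamoDB
    dynamodb = boto3.resource("dynamodb")
    table = dynamodb.Table(table_name)
    # Parse non-integer numbers as Decimal: the resource interface rejects floats
    item = json.loads(item_json, parse_float=Decimal)
    # put_item creates the item, or replaces an existing item with the same key
    response = table.put_item(Item=item)
    return response
```

Code Explanation

This function, add_item_to_dynamodb, uses Boto3 to interact with Amazon DynamoDB. It starts by creating a DynamoDB resource and then accessing the specific table using the provided table name.

The item is converted from a JSON string to a Python dictionary using json.loads with parse_float=Decimal, because the Boto3 resource interface raises a TypeError for Python float values and expects Decimal for non-integer numbers. The put_item method then writes the item to the table, replacing any existing item with the same primary key. Finally, the function returns the response from the DynamoDB operation.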
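Because put_item silently overwrites an existing item with the same primary key, a good follow-up is to make the write conditional. The sketch below uses the real put_item parameters ConditionExpression and ExpressionAttributeNames; the helper name put_item_if_absent is hypothetical, and the caller must supply the name of the table's partition key attribute. When the condition fails, Boto3 raises a ClientError whose error code is ConditionalCheckFailedException.

```python
def put_item_if_absent(table, item, partition_key):
    """Write item only if no item with the same primary key exists (hypothetical helper).

    `table` is a Boto3 DynamoDB Table resource; `partition_key` is the name of the
    table's partition key attribute.
    """
    return table.put_item(
        Item=item,
        # True only when no existing item has this primary key
        ConditionExpression="attribute_not_exists(#pk)",
        # A placeholder avoids clashes with DynamoDB reserved words such as "name"
        ExpressionAttributeNames={"#pk": partition_key},
    )
```

The check and the write happen atomically on the DynamoDB side, which avoids the race condition of reading first and writing afterwards.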

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question assesses a candidate’s skills in integrating AWS database services into their applications, a crucial aspect of building scalable and dynamic cloud-based applications. It also tests their ability to manipulate data formats and manage database interactions programmatically.

5. Delete an S3 Object

| Field | Details |
| --- | --- |
| Task | Create a Node.js function to delete an object from an S3 bucket. |
| Input Format | Two strings: the first is the name of the S3 bucket, and the second is the key of the object to be deleted. |
| Output Format | The response from the S3 delete operation. |

Suggested Answer

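```javascript
// AWS SDK for JavaScript v3 (package @aws-sdk/client-s3)
const { S3Client, DeleteObjectCommand } = require('@aws-sdk/client-s3');

const s3 = new S3Client({});

async function delete_s3_object(bucket, key) {
  const params = { Bucket: bucket, Key: key };
  // Rejects on failure (e.g. AccessDenied); resolves with response metadata on success
  const response = await s3.send(new DeleteObjectCommand(params));
  return response;
}
```

Code Explanation

The function delete_s3_object uses the AWS SDK for JavaScript v3 in a Node.js environment. It defines parameters containing the bucket name and the object key, wraps them in a DeleteObjectCommand, and sends the command through the S3 client, awaiting the result.

On success, the function returns the response metadata; for versioned buckets this includes fields such as DeleteMarker and VersionId. Failures such as missing permissions surface as a rejected promise rather than inside the response, so callers should wrap the call in try/catch. S3 also reports success when the key does not exist, so a successful response does not prove that an object was removed.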
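A common follow-up is deleting many objects at once. Calling DeleteObject in a loop costs one request per key, whereas the DeleteObjects API removes up to 1,000 keys per request (DeleteObjectsCommand in the JavaScript SDK v3). The sketch below uses Boto3's delete_objects, in Python like most examples in this article; the helper name delete_keys is hypothetical, and the client is injectable so the batching logic can be exercised with a stub.

```python
def delete_keys(bucket, keys, s3_client=None):
    """Delete many objects in batches of 1,000 and return per-key errors (hypothetical helper)."""
    if s3_client is None:
        import boto3  # imported lazily so a stub client can be injected in tests
        s3_client = boto3.client("s3")
    errors = []
    # DeleteObjects accepts at most 1,000 keys per request
    for start in range(0, len(keys), 1000):
        batch = keys[start:start + 1000]
        response = s3_client.delete_objects(
            Bucket=bucket,
            # Quiet mode: the response lists only failures, not every deleted key
            Delete={"Objects": [{"Key": k} for k in batch], "Quiet": True},
        )
        # Per-key failures are reported in "Errors" instead of raising an exception
        errors.extend(response.get("Errors", []))
    return errors
```

Returning the collected errors lets the caller retry or report individual failures, which a single try/catch around the whole operation would miss.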

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question evaluates a candidate’s ability to handle cloud storage operations programmatically, a common requirement in managing large-scale, cloud-based applications. It also tests their ability to automate routine tasks efficiently, which is essential for effective cloud resource management.

6. Automate Snapshot Creation for EC2 Instances

| Field | Details |
| --- | --- |
| Task | Write a Python function using Boto3 to automatically create a snapshot of a specified EC2 instance. |
| Input Format | A string representing the ID of the EC2 instance for which the snapshot needs to be created. |
| Output Format | The ID of the created snapshot. |

Suggested Answer

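```python
import boto3


def create_ec2_snapshot(instance_id):
    ec2 = boto3.client("ec2")
    # EBS snapshots are taken of volumes, not instances: find the root volume first
    reservations = ec2.describe_instances(InstanceIds=[instance_id])["Reservations"]
    instance = reservations[0]["Instances"][0]
    root_device = instance["RootDeviceName"]
    volume_id = next(
        mapping["Ebs"]["VolumeId"]
        for mapping in instance["BlockDeviceMappings"]
        if mapping["DeviceName"] == root_device
    )
    response = ec2.create_snapshot(
        VolumeId=volume_id, Description=f"Snapshot of {instance_id}"
    )
    return response["SnapshotId"]


# Example usage (placeholder instance ID)
snapshot_id = create_ec2_snapshot("i-1234567890abcdef0")
print(f"Snapshot created with ID: {snapshot_id}")
```

Code Explanation

This Python function uses Boto3 to create a snapshot of a specified EC2 instance. Because EBS snapshots are taken of volumes rather than instances (create_snapshot accepts a VolumeId, not an InstanceId), the function first calls describe_instances to find the EBS volume attached as the instance’s root device, then calls create_snapshot for that volume. This assumes an EBS-backed instance.

The function extracts the snapshot ID from the response and returns it. The snapshot starts in the pending state and completes asynchronously. This kind of automation is important in cloud computing for maintaining data integrity and keeping backup procedures efficient and consistent.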
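An instance often has several EBS volumes, and snapshotting only the root volume leaves data volumes unprotected. EC2's CreateSnapshots API (create_snapshots in Boto3) snapshots every attached volume at the same point in time and returns one snapshot per volume. The sketch below is a hypothetical helper, snapshot_all_volumes, with an injectable client so it can be exercised with a stub; note that it returns a list of IDs rather than the single ID the question asks for.

```python
def snapshot_all_volumes(instance_id, ec2_client=None):
    """Snapshot every EBS volume attached to an instance (hypothetical helper)."""
    if ec2_client is None:
        import boto3  # imported lazily so a stub client can be injected in tests
        ec2_client = boto3.client("ec2")
    response = ec2_client.create_snapshots(
        InstanceSpecification={"InstanceId": instance_id},
        Description=f"Multi-volume snapshot of {instance_id}",
        # Copy each source volume's tags onto its snapshot
        CopyTagsFromSource="volume",
    )
    # One entry per attached EBS volume, all taken at the same point in time
    return [snap["SnapshotId"] for snap in response["Snapshots"]]
```

Asking a candidate to compare this with the single-volume approach is a quick way to probe their understanding of crash-consistent backups.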

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question effectively evaluates the candidate’s practical skills in using AWS for essential operations like backup and recovery, demonstrating their proficiency in automating and managing cloud resources.

7. Configure a Load Balancer in AWS

| Field | Details |
| --- | --- |
| Task | Write a Python function using Boto3 to create and configure a new Elastic Load Balancer. |
| Input Format | Two lists: the first contains the IDs of the subnets where the load balancer should operate, and the second contains the security group IDs to assign to the load balancer. |
| Output Format | The ARN (Amazon Resource Name) of the newly created load balancer. |

Suggested Answer

```python
import boto3


def create_load_balancer(subnets, security_groups):
    # Elastic Load Balancing v2 manages Application and Network Load Balancers
    elb = boto3.client("elbv2")
    response = elb.create_load_balancer(
        Name="my-load-balancer",
        Subnets=subnets,  # an ALB needs subnets in at least two Availability Zones
        SecurityGroups=security_groups,
        Type="application",
    )
    return response["LoadBalancers"][0]["LoadBalancerArn"]


# Example usage (placeholder subnet and security group IDs)
subnets = ["subnet-abc123", "subnet-def456"]
security_groups = ["sg-abc12345"]
lb_arn = create_load_balancer(subnets, security_groups)
print(f"Load Balancer ARN: {lb_arn}")
```

Code Explanation

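This function demonstrates how to create an Elastic Load Balancer using Boto3 in Python. It initializes an Elastic Load Balancing v2 (elbv2) client and creates a load balancer with a fixed name and the given subnets and security groups. The type is set to ‘application’, which creates an Application Load Balancer; this type requires subnets in at least two Availability Zones. After creation, the function retrieves and returns the ARN of the load balancer. Note that the load balancer does not route any traffic until a listener and a target group are added.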
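To cover the "configure" half of the task, a candidate can attach a target group and a listener. The sketch below uses the real elbv2 calls create_target_group and create_listener; the helper name add_http_listener, the default target group name, and the choice of plain HTTP on port 80 are assumptions for illustration. Instances still need to be registered with register_targets before they receive traffic.

```python
def add_http_listener(load_balancer_arn, vpc_id, tg_name="my-targets", elb_client=None):
    """Create a target group and an HTTP:80 listener forwarding to it (hypothetical helper)."""
    if elb_client is None:
        import boto3  # imported lazily so a stub client can be injected in tests
        elb_client = boto3.client("elbv2")
    # The target group tracks which instances receive traffic and their health
    tg = elb_client.create_target_group(
        Name=tg_name,
        Protocol="HTTP",
        Port=80,
        VpcId=vpc_id,
        TargetType="instance",
        HealthCheckPath="/",
    )
    tg_arn = tg["TargetGroups"][0]["TargetGroupArn"]
    # The listener accepts client connections and forwards them to the target group
    listener = elb_client.create_listener(
        LoadBalancerArn=load_balancer_arn,
        Protocol="HTTP",
        Port=80,
        DefaultActions=[{"Type": "forward", "TargetGroupArn": tg_arn}],
    )
    return tg_arn, listener["Listeners"][0]["ListenerArn"]
```

In production, candidates would typically add an HTTPS listener with a certificate; asking how they would change this sketch for that is a natural follow-up.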

Common Mistakes to Watch Out For

Follow-ups

What the Question Tests

This question assesses a candidate’s proficiency in handling crucial AWS services related to application scaling and distribution, a key aspect of cloud-based architecture and application deployment.

Conclusion

The ability to craft and conduct effective AWS interviews is paramount for hiring managers. The seven AWS interview questions we’ve explored serve as a roadmap to uncovering a candidate’s practical skills, problem-solving abilities, and overall competence in AWS.

Key Takeaways for Hiring Managers

- Depth of Knowledge: The questions are designed to delve into the candidate’s understanding of AWS services and their ability to apply this knowledge in real-world scenarios.

- Practical Application: By focusing on tasks like file operations in S3, EC2 instance management, DynamoDB interactions, and load balancing configurations, these questions reveal the practical abilities of candidates beyond theoretical knowledge.

- Scalability and Innovation: The questions reflect scenarios that demonstrate a candidate’s aptitude for building scalable, efficient, and innovative solutions using AWS, which is crucial for any software development role.

We encourage hiring managers to leverage Interview Zen to design their AWS interviews. With our comprehensive suite of tools and resources, Interview Zen simplifies the process of creating, administering, and evaluating technical interviews.

Delve into the world of structured and effective technical interviews with Interview Zen – your partner in discovering the best AWS talent.

Don’t miss the opportunity to elevate your hiring practice to the next level. Try Interview Zen for your next round of technical interviews.
