Introduction

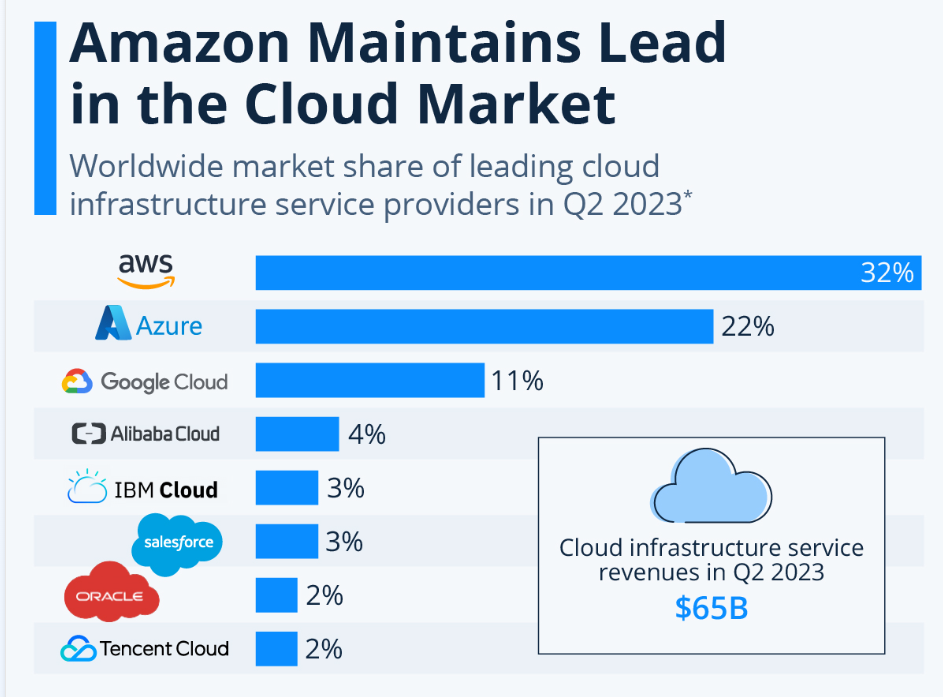

In today’s cloud computing landscape, Microsoft Azure has emerged as one of the leading cloud service providers. According to Statista, Microsoft Azure holds the second-largest market share at 20%, making it the second most popular cloud service in 2024.

Azure’s increasing relevance in the market reflects its comprehensive suite of services, including robust computing capabilities, sophisticated analytics, and versatile storage solutions. This statistic highlights Azure’s adaptability to diverse business needs and shows its importance as a skill set for software developers.

The goal of this article is to provide hiring managers with a structured guide to crafting effective Azure-related technical interviews. We will explore the top 7 Azure interview questions that every hiring manager should ask when interviewing a candidate.

What is Azure?

Microsoft Azure is a comprehensive cloud computing service created by Microsoft for building, testing, deploying, and managing applications and services through Microsoft-managed data centers. It provides various cloud services for computing, analytics, storage, and networking. Users can choose from these services to develop and scale new applications or run existing applications in the public cloud.

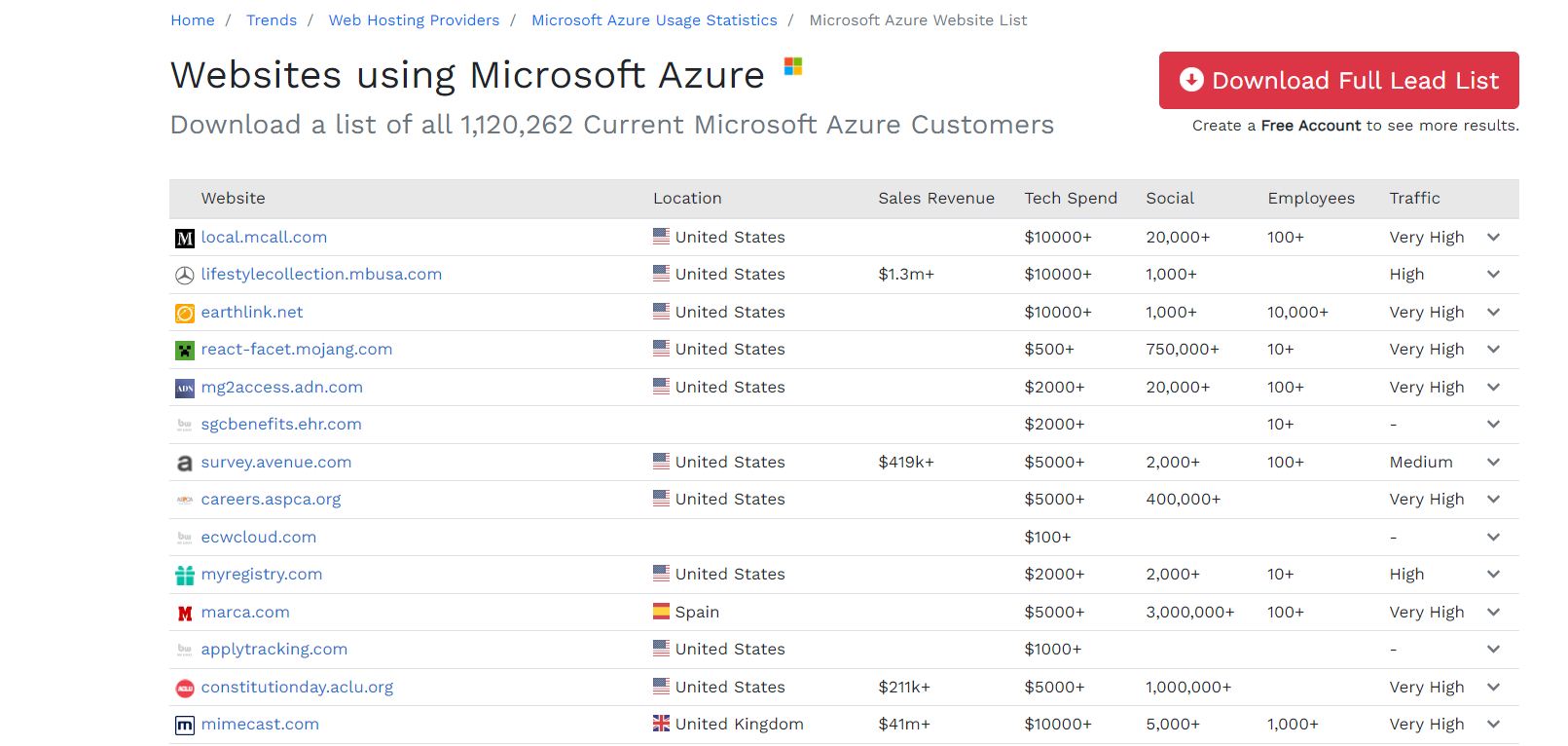

According to BuiltWith, 1.12 million live websites use Microsoft Azure’s cloud hosting services. This significant figure highlights Azure’s widespread adoption and reliability as a cloud service provider.

Key Features of Azure

- Diverse Range of Services: Azure offers a wide range of services, including virtual machines, app services, databases, and AI and machine learning. This diversity allows developers and businesses to tailor solutions to their specific needs.

- Flexibility and Scalability: With Azure, organizations can scale services to fit their needs, use the resources they require, and pay only for what they use, making it a cost-effective solution for businesses of all sizes.

- Integrated Development Environment: Azure seamlessly integrates with various tools and programming languages, offering developers a familiar and productive environment. This integration streamlines the development process and supports various application types.

- Global Network: Azure operates in numerous data centers around the globe, ensuring reliable and high-performance cloud services regardless of location. This global presence is essential for businesses looking to operate internationally.

- Security and Compliance: Azure is committed to providing a secure and compliant environment with industry-leading security measures and compliance protocols. This commitment ensures businesses can trust Azure to handle sensitive data and critical applications.

- Hybrid Capabilities: Azure supports hybrid cloud configurations, allowing businesses to keep some data and applications in private servers while utilizing the public cloud for others. This flexibility is critical for businesses with specific security or operational requirements.

Azure’s comprehensive services, scalability, security, and global reach make it a powerful tool for businesses and developers alike, facilitating innovation and digital transformation across industries. Whether it’s simple cloud storage or building complex enterprise-grade solutions, Azure offers the infrastructure and tools to meet a wide range of needs.

Popular Companies Using Azure Cloud Service

Microsoft Azure is an important provider in the cloud computing industry, not just for individual and business applications but also because of its significant adoption by the world’s leading corporations.

According to Microsoft, more than 95% of Fortune 500 companies use Azure cloud services. This statistic shows the trust and reliance these major companies place on Azure, highlighting its strength in providing scalable, secure, and comprehensive cloud solutions.

The following are some well-known companies using Azure cloud services:

- Walmart: The retail giant uses Azure for a wide range of cloud solutions, including managing certain aspects of its vast supply chain and customer service technologies.

- eBay: eBay utilizes Azure for its artificial intelligence, machine learning, and data solutions to enhance its e-commerce platform’s capabilities.

- BMW: BMW leverages Azure’s cloud capabilities for its BMW ConnectedDrive service, providing an enhanced digital experience for its customers.

- Boeing: The aerospace company uses Azure to streamline its operations, including analytics, AI, and machine learning tools to improve performance and efficiency.

- Adobe: Adobe and Microsoft have partnered to offer Adobe’s Marketing Cloud solutions on Azure, enhancing the digital marketing and e-commerce framework.

- Samsung: Samsung uses Azure for various purposes, including its smart appliance ecosystem and other IoT solutions.

- FedEx: Azure supports FedEx with cloud solutions for analytics, customer intelligence, and logistics management.

Top 7 Azure Interview Questions

Let’s explore the top 7 Azure interview questions:

1. Deploy a Web App Using Azure CLI

| Task | Write an Azure CLI script to deploy a simple web application using Azure App Service. The script must create the necessary resources, deploy a sample HTML file, and output the public URL of the web application. |

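| Input Format | The script accepts four positional parameters, in this order: the resource group name, the location, the App Service plan name, and the web app name. |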

| Constraints |

|

| Output Format | The script outputs the public URL of the deployed web app. |

Suggested Answer

| #!/bin/bash

# Parameters
resourceGroupName=$1
location=$2
appServicePlanName=$3
webAppName=$4

# Create a resource group
az group create --name "$resourceGroupName" --location "$location"

# Create an App Service plan on Free tier
az appservice plan create --name "$appServicePlanName" --resource-group "$resourceGroupName" --sku F1 --is-linux

# Create a web app
az webapp create --name "$webAppName" --resource-group "$resourceGroupName" --plan "$appServicePlanName" --runtime "NODE|14-lts"

# Deploy sample HTML file
echo "<html><body><h1>Hello Azure!</h1></body></html>" > index.html
az webapp up --resource-group "$resourceGroupName" --name "$webAppName" --html

# Print the public URL
echo "Web app deployed at: https://$webAppName.azurewebsites.net" |

Code Explanation

The script systematically establishes the Azure infrastructure to host a web app. It creates a resource group, an App Service plan on the free tier, and a web app. Then, it deploys an HTML file to this web app and outputs the URL where the web app is hosted.
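For teams that prefer to drive the CLI from Python, the same sequence can be sketched as a list of commands. This is a minimal illustration, not part of the suggested answer: the helper names `build_deploy_commands` and `web_app_url` are hypothetical, and the flags are copied from the shell script above. The sketch only builds the command lines and does not call Azure.

```python
import shlex


def build_deploy_commands(resource_group, location, plan_name, app_name):
    """Return the az CLI invocations, in execution order, as argument lists."""
    return [
        ["az", "group", "create", "--name", resource_group, "--location", location],
        ["az", "appservice", "plan", "create", "--name", plan_name,
         "--resource-group", resource_group, "--sku", "F1", "--is-linux"],
        ["az", "webapp", "create", "--name", app_name,
         "--resource-group", resource_group, "--plan", plan_name,
         "--runtime", "NODE|14-lts"],
        ["az", "webapp", "up", "--resource-group", resource_group,
         "--name", app_name, "--html"],
    ]


def web_app_url(app_name):
    """Build the default hostname pattern that the script prints."""
    return f"https://{app_name}.azurewebsites.net"


if __name__ == "__main__":
    for command in build_deploy_commands("demo-rg", "eastus", "demo-plan", "demo-app"):
        # shlex.join quotes arguments such as NODE|14-lts so each line is safe to paste
        print(shlex.join(command))
    print(web_app_url("demo-app"))
```

To execute the commands rather than print them, each list could be passed to `subprocess.run(command, check=True)`, which stops at the first failing step.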

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question is crucial for evaluating a candidate’s practical skills in deploying applications in Azure, showcasing their proficiency in using Azure CLI, and understanding Azure’s web hosting services. |

2. Configure Azure Blob Storage and Upload a File

| Task | Write a Python script using Azure SDK to create a container in Azure Blob Storage and upload a file to this container. |

| Input Format | The script accepts three parameters: the storage account connection string, the container name, and the path of the file to upload. |

| Constraints |

|

| Output Format | Print the URL of the uploaded blob. |

Suggested Answer

| import os

from azure.storage.blob import BlobServiceClient


def upload_to_blob(connection_string, container_name, file_path):
    try:
        # Create the BlobServiceClient
        blob_service_client = BlobServiceClient.from_connection_string(connection_string)

        # Create or get container
        container_client = blob_service_client.get_container_client(container_name)
        if not container_client.exists():
            blob_service_client.create_container(container_name, public_access='blob')

        # Upload file to blob, using the file name as the blob name
        blob_client = blob_service_client.get_blob_client(container=container_name, blob=os.path.basename(file_path))
        with open(file_path, "rb") as data:
            blob_client.upload_blob(data)

        print(f"File uploaded to: {blob_client.url}")
    except Exception as e:
        print(f"An error occurred: {e}")


# Sample Usage
# upload_to_blob('<Your Connection String>', 'sample-container', 'path/to/file.txt') |

Code Explanation

The script utilizes the Azure SDK for Python. It starts by creating a BlobServiceClient with the given connection string. The script then either retrieves or creates the specified container with the required access level.

Next, it reads the specified file as binary data and uploads it to the blob storage. After a successful upload, the blob’s URL is printed. The script includes exception handling to capture and report errors like invalid input.
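Invalid input is easier to report when it is checked before any call to Azure. The sketch below is one possible pre-flight check, assuming the commonly documented container naming rules (3 to 63 characters; lowercase letters, digits, and single hyphens; starting and ending with a letter or digit). The helper names are hypothetical, and special containers such as `$root` are deliberately not handled.

```python
import re
from pathlib import PureWindowsPath

# Lowercase alphanumeric groups separated by single hyphens
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name):
    """Check length and character rules for a blob container name."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


def blob_name_from_path(file_path):
    """Return the file name, accepting both '/' and '\\' as separators."""
    return PureWindowsPath(file_path).name


if __name__ == "__main__":
    print(is_valid_container_name("sample-container"))
    print(blob_name_from_path("path/to/file.txt"))
```

`PureWindowsPath` is used only because it splits on both separator styles, so a path typed on Windows still yields a clean blob name.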

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question assesses the candidate’s proficiency in handling Azure cloud storage solutions, particularly Azure Blob Storage, and their capability to automate cloud storage tasks using Python. |

3. Azure Functions: HTTP Trigger with Cosmos DB Integration

| Task | Create an Azure Function triggered by an HTTP GET request that retrieves a document from Azure Cosmos DB using a provided ID and returns the document as a JSON response. |

| Input Format | An HTTP GET request with a query parameter named docId, representing the ID of the document to be retrieved. |

| Constraints |

|

| Output Format | The requested document in JSON format or an error message if the document isn’t found. |

Suggested Answer

| using System.IO;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Logging;
using Newtonsoft.Json;
using Microsoft.Azure.Documents.Client;
using Microsoft.Azure.Documents.Linq;
using System.Linq;

public static class GetDocumentFunction
{
    [FunctionName("RetrieveDocument")]
    public static IActionResult Run(
        [HttpTrigger(AuthorizationLevel.Function, "get", Route = null)] HttpRequest req,
        [CosmosDB(
            databaseName: "MyDatabase",
            collectionName: "MyContainer",
            ConnectionStringSetting = "AzureWebJobsCosmosDBConnectionString",
            Id = "{Query.docId}")] dynamic document,
        ILogger log)
    {
        log.LogInformation("C# HTTP trigger function processed a request.");

        if (document == null)
        {
            return new NotFoundObjectResult("Document not found.");
        }

        return new OkObjectResult(document);
    }
} |

Code Explanation

The provided C# Azure Function is set up to be triggered by an HTTP GET request. It employs a CosmosDB input binding to fetch a document from a specified Cosmos DB container directly based on the docId query parameter. If the document is found, it is returned in JSON format.

If not, a 404 Not Found response is given, along with a message indicating that the document was not found.
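The request-handling logic can be examined without the Functions runtime. The Python sketch below mirrors the found / not found branches using an in-memory dictionary as a stand-in for the Cosmos DB container; `FAKE_CONTAINER` and `retrieve_document` are hypothetical names. The 400 response for a missing `docId` is an addition that the C# function above does not include.

```python
import json

# Stand-in for the Cosmos DB container: document ID -> document
FAKE_CONTAINER = {
    "42": {"id": "42", "title": "Quarterly report"},
}


def retrieve_document(query_params, container=FAKE_CONTAINER):
    """Return (status_code, body) for the query parameters of a GET request."""
    doc_id = query_params.get("docId")
    if not doc_id:
        # Not part of the original function: reject requests without an ID
        return 400, "Query parameter 'docId' is required."
    document = container.get(doc_id)
    if document is None:
        return 404, "Document not found."
    return 200, json.dumps(document)


if __name__ == "__main__":
    print(retrieve_document({"docId": "42"}))
    print(retrieve_document({"docId": "7"}))
```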

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question assesses the candidate’s capability to build serverless applications that interact with database services, a critical aspect of modern cloud-based application development. It tests both their technical knowledge of Azure services and their practical ability to implement these services in a real-world scenario. |

4. Azure Virtual Machine: Automate VM Setup with Azure SDK for Python

| Task | Write a Python script using Azure SDK to create a new virtual machine (VM) that runs Ubuntu Server 18.04 LTS and automatically installs Docker upon setup. |

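| Input Format | The script accepts the following parameters: resource group name, VM name, location, subscription ID, and the service principal’s client ID, client secret, and tenant ID. |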

| Constraints |

|

| Output Format | Print the public IP address of the created VM. |

Suggested Answer

| import base64
import os

from azure.identity import ClientSecretCredential
from azure.mgmt.compute import ComputeManagementClient
from azure.mgmt.network import NetworkManagementClient
from azure.mgmt.resource import ResourceManagementClient


def create_vm_with_docker(resource_group, vm_name, location, subscription_id, client_id, client_secret, tenant_id):
    # Authenticate using service principal
    credential = ClientSecretCredential(client_id=client_id, client_secret=client_secret, tenant_id=tenant_id)

    # Initialize management clients
    resource_client = ResourceManagementClient(credential, subscription_id)
    compute_client = ComputeManagementClient(credential, subscription_id)
    network_client = NetworkManagementClient(credential, subscription_id)

    # Assuming network setup, storage, etc. are in place

    # Fetch SSH public key (open() does not expand "~", so expand it explicitly)
    with open(os.path.expanduser("~/.ssh/id_rsa.pub"), "r") as f:
        ssh_key = f.read().strip()

    # Post-deployment script to install Docker; custom data must be Base64-encoded.
    # docker.io is Ubuntu's packaged Docker (the original "docker.ce" is not a valid package name).
    install_script = "#!/bin/bash\nsudo apt-get update && sudo apt-get install -y docker.io"
    custom_data = base64.b64encode(install_script.encode("utf-8")).decode("ascii")

    # Define VM parameters
    vm_parameters = {
        # ... various VM parameters like size, OS type, etc.
        'os_profile': {
            'computer_name': vm_name,
            'admin_username': 'azureuser',
            'linux_configuration': {
                'disable_password_authentication': True,
                'ssh': {
                    'public_keys': [{
                        'path': '/home/azureuser/.ssh/authorized_keys',
                        'key_data': ssh_key
                    }]
                }
            },
            'custom_data': custom_data
        }
    }

    # Create VM (SDK versions that accept azure-identity credentials expose begin_* pollers)
    creation_poller = compute_client.virtual_machines.begin_create_or_update(resource_group, vm_name, vm_parameters)
    creation_poller.result()

    # Print the public IP address (assuming IP is already allocated)
    public_ip = network_client.public_ip_addresses.get(resource_group, f"{vm_name}-ip")
    print(f"Virtual Machine available at: {public_ip.ip_address}")


# Sample Usage
# create_vm_with_docker('<Resource Group Name>', '<VM Name>', '<Location>', '<Subscription ID>', '<Client ID>', '<Client Secret>', '<Tenant ID>') |

Code Explanation

The script sets up authentication with Azure using service principal credentials. It initializes clients for managing resources, computing, and networking operations in Azure. The VM is defined with Ubuntu Server 18.04 LTS, SSH key authentication, and a post-deployment script to install Docker. The script also assumes prior setup of network and storage resources. Once the VM is created, the script retrieves and prints its public IP address.
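The post-deployment script reaches the VM as Base64-encoded custom data, and a hand-pasted Base64 string is easy to corrupt. A small sketch of generating and checking it in code follows; the helper names are hypothetical, and `docker.io` (Ubuntu’s own Docker package) is an assumption about the intended package.

```python
import base64

# Shell script to run on first boot; docker.io is an assumed package choice
INSTALL_SCRIPT = "#!/bin/bash\nsudo apt-get update && sudo apt-get install -y docker.io"


def encode_custom_data(script):
    """Encode a shell script as the Base64 text expected for VM custom data."""
    return base64.b64encode(script.encode("utf-8")).decode("ascii")


def decode_custom_data(custom_data):
    """Decode custom data back to the script, useful when reviewing a template."""
    return base64.b64decode(custom_data).decode("utf-8")


if __name__ == "__main__":
    encoded = encode_custom_data(INSTALL_SCRIPT)
    print(encoded)
    print(decode_custom_data(encoded))
```

Decoding during review is a quick way to confirm which package the VM will actually try to install.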

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question evaluates the candidate’s ability to create and configure Azure VMs programmatically, a crucial skill for efficient cloud resource management and automation. It also tests their understanding of the integration between various Azure services. |

5. Azure SQL Database: Data Migration and Querying

| Task | Write a Python script to connect to an Azure SQL Database, migrate data from a CSV file to a table, and run a query on the table. |

| Input Format | The script should accept command-line arguments in this order: the database connection string, the path to the CSV file, and the SQL query to run. |

| Constraints |

|

| Output Format | Print a success message post data migration and display the results of the SQL query in a readable format. |

Suggested Answer

| import csv
import sys

import pyodbc


def migrate_and_query_data(conn_string, csv_path, sql_query):
    conn = None
    try:
        # Connect to Azure SQL Database
        conn = pyodbc.connect(conn_string)
        cursor = conn.cursor()

        # Migrate CSV data
        with open(csv_path, 'r', newline='') as file:
            reader = csv.DictReader(file)
            for row in reader:
                columns = ', '.join(row.keys())
                placeholders = ', '.join('?' for _ in row)
                query = f"INSERT INTO target_table ({columns}) VALUES ({placeholders})"
                cursor.execute(query, list(row.values()))

        # pyodbc does not autocommit by default, so persist the inserts explicitly
        conn.commit()
        print("Data migration successful!")

        # Execute SQL query and display results
        cursor.execute(sql_query)
        for row in cursor.fetchall():
            print(row)
    except pyodbc.Error as e:
        print(f"Database error: {e}")
    except Exception as e:
        print(f"An error occurred: {e}")
    finally:
        # Close the connection even when an error occurs
        if conn is not None:
            conn.close()


# Sample usage (with parameters replaced appropriately)
# migrate_and_query_data(sys.argv[1], sys.argv[2], sys.argv[3]) |

Code Explanation

The script uses pyodbc to establish a connection with the Azure SQL Database. It reads the CSV file and inserts each row into the target table in the database. After successful data migration, the script executes the provided SQL query and prints the fetched data. Exception handling is included to manage database errors and other potential issues.
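The insert pattern can be tried locally before pointing it at Azure. The sketch below uses Python’s built-in `sqlite3` as a stand-in for the Azure SQL connection, which is an assumption made only so the example runs anywhere; the `?` placeholders work the same way with pyodbc. The `ALLOWED_COLUMNS` whitelist and the two-column schema are hypothetical. They illustrate one way to avoid interpolating untrusted CSV headers into SQL.

```python
import csv
import io
import sqlite3

# Hypothetical schema for target_table; CSV headers outside this set are rejected
ALLOWED_COLUMNS = {"id", "name"}


def load_csv(conn, csv_text):
    """Insert all CSV rows in one batch and return the number of rows inserted."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = reader.fieldnames or []
    if not columns:
        return 0
    unexpected = set(columns) - ALLOWED_COLUMNS
    if unexpected:
        raise ValueError(f"Unexpected columns: {sorted(unexpected)}")
    placeholders = ", ".join("?" for _ in columns)
    query = f"INSERT INTO target_table ({', '.join(columns)}) VALUES ({placeholders})"
    rows = [[row[column] for column in columns] for row in reader]
    conn.executemany(query, rows)
    conn.commit()  # persist the batch explicitly
    return len(rows)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    connection.execute("CREATE TABLE target_table (id INTEGER, name TEXT)")
    print(load_csv(connection, "id,name\n1,Ada\n2,Grace\n"))
    print(connection.execute("SELECT * FROM target_table").fetchall())
```

Batching with `executemany` and committing once keeps the migration to a single transaction, so a failure partway through leaves the table unchanged.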

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question evaluates the candidate’s skills in database management, particularly in Azure SQL, and their capability to automate data-centric tasks using Python. It also tests their practical knowledge in handling real-world data integration and manipulation scenarios. |

6. Azure Logic Apps with ARM Templates: Automated Data Sync

| Task | Implement an Azure Logic App using an ARM template to automate data syncing between two Azure Table Storage accounts. |

| Input Format |

|

| Constraints |

|

Suggested Answer (ARM Template)

| {
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "resources": [
    {
      "type": "Microsoft.Logic/workflows",
      "apiVersion": "2017-07-01",
      "name": "SyncAzureTablesLogicApp",
      "location": "[resourceGroup().location]",
      "properties": {
        "definition": {
          "$schema": "…",
          "contentVersion": "…",
          "triggers": {
            "When_item_is_added": {
              "type": "ApiConnection",
              // Additional trigger details
            }
          },
          "actions": {
            "Add_item_to_destination": {
              "type": "ApiConnection",
              // Additional action details
            }
          }
        },
        "parameters": { … }
      }
    }
  ],
  "outputs": { … }
} |

Code Explanation

The ARM template is a structured JSON file that defines an Azure Logic App resource for syncing data between two Azure Table Storage accounts. It specifies the app’s location, trigger (activated upon a new item addition in the source table), and actions (to add an item to the destination table). This approach aligns with DevOps practices, allowing automation, version control, and consistency across deployment environments.
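When templates like this are generated or reviewed in a pipeline, a quick structural check can catch a missing top-level element before deployment. The following sketch assembles the same skeleton as a Python dictionary and checks for the three elements an ARM template requires (`$schema`, `contentVersion`, `resources`). The function names are hypothetical, and the workflow definition is supplied by the caller because the template above leaves its details open.

```python
import json

REQUIRED_TOP_LEVEL = ("$schema", "contentVersion", "resources")


def build_logic_app_template(name, workflow_definition):
    """Wrap a caller-supplied workflow definition in an ARM template skeleton."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Logic/workflows",
                "apiVersion": "2017-07-01",
                "name": name,
                "location": "[resourceGroup().location]",
                "properties": {"definition": workflow_definition},
            }
        ],
    }


def missing_top_level_keys(template):
    """List required top-level elements that the template does not define."""
    return [key for key in REQUIRED_TOP_LEVEL if key not in template]


if __name__ == "__main__":
    template = build_logic_app_template("SyncAzureTablesLogicApp", {"triggers": {}, "actions": {}})
    print(missing_top_level_keys(template))
    print(json.dumps(template, indent=2))
```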

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests |

This question assesses a candidate’s skill in understanding and implementing Azure Logic Apps and proficiency in using ARM templates for defining cloud resources and workflows. It reflects the candidate’s capability to manage complex cloud integrations and automate processes in a cloud environment. |

7. Implementing a Secure Azure Key Vault Backup Strategy

| Task | Write a Python script that automates the backup process of secrets stored in Azure Key Vault. The script should securely connect to an Azure Key Vault, retrieve all secrets, and save them in an encrypted backup file. |

| Input Format | The script should accept:

|

| Constraints |

|

| Output Format |

|

Suggested Answer

| import json

from azure.identity import ClientSecretCredential
from azure.keyvault.secrets import SecretClient
from cryptography.fernet import Fernet


def backup_key_vault_secrets(vault_name, client_id, tenant_id, client_secret, backup_file_path, encryption_key):
    try:
        # Authentication
        credential = ClientSecretCredential(tenant_id=tenant_id, client_id=client_id, client_secret=client_secret)
        client = SecretClient(vault_url=f"https://{vault_name}.vault.azure.net/", credential=credential)

        # Retrieve secrets
        backup_data = {
            secret.name: client.get_secret(secret.name).value
            for secret in client.list_properties_of_secrets()
        }

        # Encrypt and save backup data
        # Assumption: Fernet (symmetric encryption from the cryptography package) stands in
        # for the unspecified encryption step; encryption_key is a key from Fernet.generate_key()
        # and must be stored separately from the backup file.
        encrypted_backup_data = Fernet(encryption_key).encrypt(json.dumps(backup_data).encode("utf-8"))
        with open(backup_file_path, "wb") as file:
            file.write(encrypted_backup_data)

        print(f"Backup completed successfully. File saved at: {backup_file_path}")
    except Exception as e:
        print(f"An error occurred: {e}")


# Sample Usage
# backup_key_vault_secrets('<vault-name>', '<client-id>', '<tenant-id>', '<client-secret>', 'path/to/backup/file', b'<fernet-key>') |

Code Explanation

The script uses the Azure SDK for Python to authenticate and connect to Azure Key Vault. It retrieves all secrets and stores them in a dictionary. The secrets are then encrypted (using a chosen encryption method) and written to the specified backup file. The script includes error handling for authentication issues and file operations.
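One detail worth probing in an interview is what happens when the vault contains disabled secrets, since reading the value of a disabled secret is expected to fail. The sketch below shows one way to skip them. It runs against a small fake client so it needs no Azure access: `FakeSecretClient` and `collect_secrets` are hypothetical names, and the fake only imitates the two methods the script calls (`list_properties_of_secrets` and `get_secret`) along with the `name`, `enabled`, and `value` attributes.

```python
from types import SimpleNamespace


class FakeSecretClient:
    """Illustrative stand-in exposing the two SecretClient methods the script uses."""

    def __init__(self, secrets):
        self._secrets = secrets  # name -> (value, enabled)

    def list_properties_of_secrets(self):
        for name, (_, enabled) in self._secrets.items():
            yield SimpleNamespace(name=name, enabled=enabled)

    def get_secret(self, name):
        value, enabled = self._secrets[name]
        if not enabled:
            raise PermissionError(f"Secret '{name}' is disabled")
        return SimpleNamespace(name=name, value=value)


def collect_secrets(client):
    """Return (backup, skipped): enabled secret values and names of disabled secrets."""
    backup, skipped = {}, []
    for properties in client.list_properties_of_secrets():
        if not properties.enabled:
            skipped.append(properties.name)
            continue
        backup[properties.name] = client.get_secret(properties.name).value
    return backup, skipped


if __name__ == "__main__":
    demo = FakeSecretClient({"db-password": ("s3cret", True), "old-key": ("x", False)})
    print(collect_secrets(demo))
```

Reporting the skipped names alongside the backup makes it clear that the file is intentionally incomplete rather than silently missing entries.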

| Common Mistakes to Watch Out For |

|

| Follow-ups |

|

| What the Question Tests | This question examines the developer’s ability to interact with Azure Key Vault using the Azure SDK for Python, emphasizing secure practices in handling sensitive data. It also tests skills in automating backup processes and implementing basic encryption for data security. |

Conclusion

In the evolving landscape of cloud computing, where Azure stands as an important platform, the ability to identify and evaluate Azure-specific skills is crucial for hiring managers. The essence of these top 7 Azure interview questions lies in their targeted approach. Each question is designed to examine the depth of a candidate’s knowledge and practical application skills in real-world Azure scenarios.

Key Takeaways

- Comprehensiveness: These questions cover a broad spectrum of Azure services, ensuring a well-rounded assessment of a candidate’s expertise.

- Practicality: The focus on real-world tasks demonstrates how candidates would handle typical Azure-related challenges they might encounter in the role.

- Depth of Understanding: The questions delve into the candidate’s ability to use Azure services and integrate them effectively, reflecting their problem-solving and critical-thinking capabilities.

We encourage hiring managers and recruiters to leverage Interview Zen for their Azure interview needs. Whether you’re building a new interview process or refining an existing one, Interview Zen offers the resources and support needed to conduct thorough and insightful Azure assessments.

Utilize Interview Zen to ensure that your candidates are evaluated comprehensively, allowing you to identify and recruit the best Azure talent in the industry. Explore Interview Zen today and elevate your Azure hiring process to the next level.

Try Interview Zen for your next round of technical interviews.
